The AI Training Challenge For 2026

Post-training isn’t just a refinement step—it’s where AI’s real costs surface, forcing labs to scale domain expertise, not just compute.

Welcome to Memorandum Deep Dives. In this series, we go beyond the headlines to examine the forces quietly reshaping the AI economy. 🗞️

Today, we’re diving into a less visible constraint facing AI in 2026—not compute, not models, but people.

Over the past two years, AI labs raced to build ever larger systems, treating scale as the primary path to intelligence. But as models moved from pre-training to post-training, a harder problem emerged: who teaches these systems to reason correctly in medicine, law, finance, and science? As costs rise and talent pools thin, the industry is discovering that intelligence doesn’t scale without expertise—and that where this expertise lives may matter as much as how models are built.

AI’s Post-Training Shift: When Intelligence Needs Experts

The AI industry spent 2024 and 2025 racing to build bigger, faster models. In 2026, it's discovering that raw compute was not the only constraint.

Expertise is a major bottleneck, and it's forcing AI labs to rethink where they find the people who turn foundation models into tools that actually work.

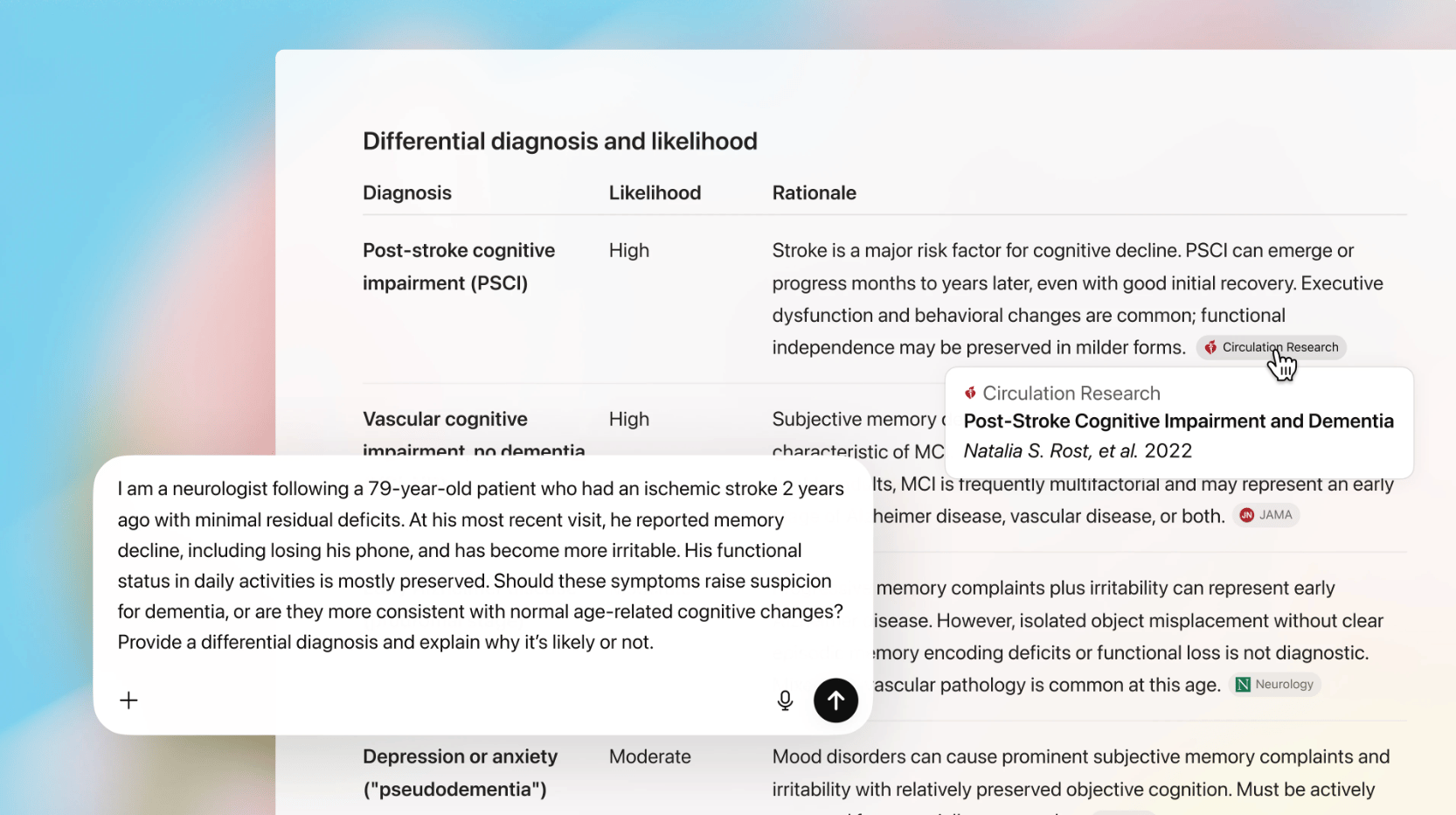

As AI systems move from pre-training to post-training—the phase where models learn to follow instructions, reason through problems, and adapt to specific domains—the work requires a fundamentally different kind of workforce. Not software engineers writing training loops, but domain experts who can evaluate whether a model's medical diagnosis makes clinical sense or whether its legal reasoning would hold up in court.

The problem is acute. Post-training demands professionals with deep specialization: radiologists who can spot diagnostic errors, computer scientists who can identify algorithmic flaws, researchers with PhDs in the exact domains where AI is being deployed.

The talent exists, but it's stretched thin. And most AI companies are competing for the same limited pool, overlooking regions with the expertise and capacity to deliver at scale.

The talent crunch by the numbers

The World Economic Forum reports that 94% of C-suite executives already face critical AI skill shortages, with one in three saying those gaps exceed 40% of what they need. McKinsey's labor-market data shows roles requiring explicit AI fluency growing rapidly across the U.S. workforce, with more teams competing for the same scarce talent simultaneously.

LinkedIn's 2026 Jobs on the Rise report makes the shift concrete. AI Engineer, AI Consultant, and AI/ML Researcher now rank among the five fastest-growing roles in the United States—positions that were marginal or nonexistent just a few years ago. Also in the top five: Data Annotators, the frontline workers who label and review data to train AI models.

The Atlanta Fed's analysis reveals where the demand is concentrating. AI skill requirements are spiking not just in computer and mathematical occupations, but across healthcare practitioners, legal professionals, life scientists, and business analysts—fields that require years of specialized training beyond technical AI knowledge.

But these numbers mask a deeper problem. The shortage isn't just about AI engineers or data scientists—roles where bootcamps and university programs are ramping up supply. It's about domain experts who can evaluate whether AI outputs are actually correct in high-stakes fields. That's a fundamentally different talent pool, and one that can't be trained in a few months.

From scale to specialization

For years, AI training followed a predictable pattern. Teams collected massive datasets, ran them through enormous clusters of GPUs, and let the models absorb statistical patterns. The work was technical, expensive, and largely automated once the infrastructure was in place.

Post-training changed that. Reinforcement Learning from Human Feedback fundamentally shifted AI development from a purely technical exercise to one requiring deep domain knowledge. Unlike earlier supervised learning approaches where workers could label images or transcribe audio without subject-matter expertise, modern AI systems need experts who understand why something is correct, not just what looks right.
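To make the role of expert judgment concrete, here is a minimal sketch of one common way preference labels feed into RLHF: a pairwise (Bradley-Terry style) loss for a reward model. The article does not say which formulation any particular lab uses, so treat this as an illustrative assumption. The function name and the reward scores are hypothetical.

```python
import math

def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise preference loss: -log(sigmoid(r_chosen - r_rejected)).

    Low when the reward model already scores the human-preferred answer higher,
    high when it scores the rejected answer higher.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) is mathematically equal to log(1 + exp(-m))
    return math.log1p(math.exp(-margin))

# Hypothetical reward-model scores for two answers to the same clinical question.
score_sound_answer = 2.0      # clinically sound reasoning
score_plausible_error = 0.0   # plausible-sounding but wrong

# An expert marks the sound answer as "chosen": small loss, model is reinforced.
expert_loss = pairwise_preference_loss(score_sound_answer, score_plausible_error)

# A non-expert marks the plausible error as "chosen": large loss, so training
# would push the reward model toward the wrong answer.
mislabeled_loss = pairwise_preference_loss(score_plausible_error, score_sound_answer)

print(f"expert label loss:     {expert_loss:.3f}")
print(f"mislabeled pair loss:  {mislabeled_loss:.3f}")
```

The point of the sketch is that the loss has no notion of correctness on its own. It only knows which answer the labeler picked, so the quality of the training signal depends on the labeler's expertise.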

A generic data labeler can tag a stop sign in an image. But medical AI demands radiologists who recognize the difference between benign calcifications and early-stage cancer, a distinction that takes years of clinical training to internalize. Code generation models need computer scientists who can identify whether an algorithm is actually optimal or just superficially correct. Financial compliance systems miss critical edge cases when regulatory experts aren't involved in training and review.

The result is a widening gap between what AI can demonstrate in controlled settings and what it can reliably deliver in the real world. Without domain experts in the loop, AI remains impressive to watch but difficult to trust.

Why hiring breaks at scale

The economics reveal the problem's depth. Hiring PhDs and Master's graduates across Computer Science, Mathematics, Physics, Law, Biology, and Healthcare costs tens of thousands of dollars per hire. Scale that across the number of experts needed to train specialized models, and budgets spiral into the hundreds of thousands or millions.
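As a rough illustration of how quickly per-hire costs compound, the sketch below multiplies an assumed cost per hire by a few team sizes. The $30,000 figure and the team sizes are hypothetical values picked to sit within "tens of thousands of dollars per hire"; they are not drawn from any of the reports cited here.

```python
def total_hiring_cost(cost_per_hire: float, num_experts: int) -> float:
    """Total hiring cost for a team, assuming every hire costs the same."""
    return cost_per_hire * num_experts

# Hypothetical figure for illustration only.
ASSUMED_COST_PER_HIRE = 30_000

for team_size in (10, 50, 100):
    total = total_hiring_cost(ASSUMED_COST_PER_HIRE, team_size)
    print(f"{team_size:>3} experts -> ${total:,.0f}")
```

Under these assumptions, ten experts already cost $300,000 to bring on, and a hundred cost $3 million, before any salaries are paid.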

These pressures have forced AI labs into an uncomfortable tradeoff: either spend heavily on hiring and retaining domain experts, or rely on cheaper, generic annotators who lack the expertise to catch subtle errors.

Expert compensation shows why hiring these specialists is so difficult. Radiologists typically earn between $300,000 and $500,000 annually reading scans in clinical settings. Research scientists at major tech companies earn $250,000 to $450,000 working on production systems and research problems. Asking them to spend evenings labeling training data for a fraction of that pay rarely works. A few participate out of curiosity or belief in AI's potential, but they're the exception. Most are already stretched thin doing the work AI is meant to support.

This points not to a lack of expertise but to a mismatch in where and how it is applied. The talent exists. It's just already committed to high-pressure, in-person work rather than to annotation tasks for AI teams.

The geography solution

The mismatch isn't in talent; it's in location. Radiologists exist. Computer scientists exist. Physicists exist. PhD-level researchers in biology, mathematics, and specialized domains exist. They're just not optimally distributed for the way AI companies are hiring.

Expertise has always been global. A radiologist in São Paulo interprets imaging studies using the same diagnostic principles as one in Boston. A computer scientist in Buenos Aires approaches algorithms, systems architecture, and optimization problems with the same rigor as one in Cambridge. A physicist in Santiago understands thermodynamics, quantum mechanics, and computational modeling the same way one in San Francisco does.

While North American AI labs compete for the same pool of experts, Latin America has been quietly building depth in exactly the fields post-training demands.

For AI labs, this means expanding their expert workforce beyond local markets at a cost that lets them reinvest the savings into compute infrastructure and model development. It's not about replacing expensive U.S. talent; it's about scaling domain expertise to match the volume of post-training work modern AI systems require.

The gap isn't one of capability. It's one of connection. AI companies willing to think globally and hire globally can embed expert judgment into their models from the start, turning domain knowledge from a bottleneck into an advantage.

When expertise becomes infrastructure

The shift to post-training as the primary value driver in AI development has made domain expertise as critical as compute. AI labs have spent billions building data centers and securing chip supply. The ones that unlock new talent pools for expert labor will have a decisive advantage.

That advantage matters most when models move beyond general-purpose capabilities. Post-training requires distinguishing between clinically sound reasoning and plausible-sounding errors, between optimal algorithms and code that merely compiles, or between statistically valid analysis and calculations that look superficially correct.

Latin America's talent pool offers a way to scale that capability. Not as a cost-cutting measure, but as a strategic shift in how AI labs think about where expertise lives and how it scales. With tens of thousands of STEM PhD and Master's graduates entering the workforce annually across medicine, computer science, physics, and engineering, the region provides the depth and volume post-training demands.

For AI labs, the question isn't whether to look beyond traditional tech hubs. It's whether they can afford not to. As models get more specialized and post-training costs rise, access to distributed expertise stops being optional and starts looking like infrastructure.

The industry spent two years learning that compute alone doesn't solve intelligence. It may spend the next two learning that intelligence without expertise doesn't solve problems. The labs that figure out how to access domain knowledge at scale—regardless of geography—will have an edge that raw model size can't replicate.
